How to Measure the Performance of Training Programs

Jill W.

According to the ATD 2016 State of the Industry report, organizations spend an average of $1,252 per employee on training and development, and the average employee receives 33.5 hours of training per year.

These numbers indicate that training is an essential component of most organizations. They also suggest that we need to shift our focus from measuring 'how much' to measuring 'how well'. How do we know if a training program is effective? Are employees actually learning? Is employee performance improving?

This article highlights three ways to measure the effectiveness and performance of your training: measuring engagement, observing social ownership, and using metrics. I will begin by introducing you to the Kirkpatrick Model, a globally recognized standard for evaluating the effectiveness of training.

Kirkpatrick's Four Levels of Learning Evaluation

The Kirkpatrick Model takes into account all types of training and can be applied to both formal and informal training.

The model consists of four levels of evaluation:

- Level 1 - Reaction

- Level 2 - Learning

- Level 3 - Behaviour

- Level 4 - Results

1. Reaction - Where the reaction of learners is measured

Level 1 - Where the reaction of the learners is measured: what they thought and felt about the training. Did learners like and enjoy the training? Did they consider the training relevant? Was it a good use of their time? Did they like the venue, style and timing of the training? What was the level of participation? What level of effort was required to make the most of the material?

You are ultimately looking for learner satisfaction. Examples of tools you can use to measure reaction are 'smiley sheets', feedback forms, verbal reaction, post-training surveys or questionnaires and online evaluations. What you are looking for is a positive reaction when people describe their training experience to you.

2. Learning - Where the increase in knowledge from before to after the learning experience is measured

Level 2 - Where the increase in knowledge or intellectual capability from before to after the learning experience is measured. Did the learner learn what was intended to be taught? Did the learner experience what was intended for them to experience? What is the extent of advancement in performance or change in the learner after the training?

Typically, learning is assessed using a pre-test/post-test method. Learners can be given a written exam to assess knowledge or an on-the-job practical evaluation.
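As a rough illustration of the pre-test/post-test method, the sketch below compares each learner's score before and after training. The learner names, the scores and the `score_gains` helper are all hypothetical, and a simple score difference is only one of several ways you might summarize the change.

```python
from statistics import mean


def score_gains(pre_scores, post_scores):
    """Return each learner's gain (post-test minus pre-test).

    Only learners who completed both tests are compared.
    """
    return {
        learner: post_scores[learner] - pre_scores[learner]
        for learner in pre_scores
        if learner in post_scores
    }


# Hypothetical scores out of 100; not data from a real program.
pre = {"ana": 55, "ben": 70, "cho": 40}
post = {"ana": 80, "ben": 75, "cho": 65}

gains = score_gains(pre, post)
average_gain = mean(gains.values())

print(gains)
print(f"Average gain: {average_gain:.1f} points")
```

A small or negative gain for a learner who already scored well on the pre-test may simply mean the material was not new to them, so it helps to read the gains alongside the starting scores.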

3. Behaviour - Where transfer is measured

Level 3 - Where the extent to which the learner has applied the learning and/or changed their behaviour on-the-job, also known as knowledge transfer, is measured. Did the learner put their learning into effect? Were relevant skills and knowledge used? Is the learner able to transfer what they learned to other situations or to other colleagues?

Examples of tools you can use to measure behavioural changes include ongoing assessments, observations and interviews combined with feedback. Self-assessment can also be useful as long as you have identified clear criteria and measurement standards.

4. Results - Where the effects of improved performance are measured

Level 4 - Where the effect of improved performance on the business, organization or environment is measured. Generally, key performance indicators are evaluated and reported on over time.

As suggested by Jeffery Berk, "the Kirkpatrick model is nice, but without a process to measure these levels it might not be practical".

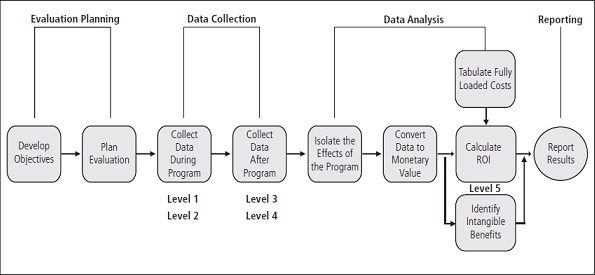

Dr. Jack Phillips, who I had the pleasure of meeting last year at the International Society for Performance Improvement (ISPI) conference, added a fifth level - Return on Investment (ROI). ROI compares the monetary benefits from the program with the program costs. Dr. Phillips also built a process to measure Kirkpatrick's four levels.

The diagram below illustrates his methodology. Additional information can be found on the ROI Institute of Canada's website or by reading the article Measuring the ROI of eLearning written by Jill Walker.

Figure 1. The Phillips ROI Methodology - Source: ROI Institute.
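To make the comparison of benefits and costs concrete, here is a minimal sketch of the two calculations commonly associated with this fifth level: the benefit-cost ratio (benefits divided by costs) and ROI as a percentage (net benefits divided by costs, times 100). The function names and dollar figures are hypothetical, and the sketch assumes you have already converted the program's benefits to a monetary value.

```python
def benefit_cost_ratio(benefits, costs):
    """Monetary benefits divided by program costs."""
    if costs <= 0:
        raise ValueError("costs must be greater than zero")
    return benefits / costs


def roi_percent(benefits, costs):
    """Net benefits (benefits minus costs) as a percentage of costs."""
    if costs <= 0:
        raise ValueError("costs must be greater than zero")
    return (benefits - costs) / costs * 100


# Hypothetical figures for a single training program.
program_benefits = 150_000  # estimated monetary benefits, in dollars
program_costs = 100_000     # fully loaded program costs, in dollars

bcr = benefit_cost_ratio(program_benefits, program_costs)
roi = roi_percent(program_benefits, program_costs)

print(f"Benefit-cost ratio: {bcr:.2f}")
print(f"ROI: {roi:.0f}%")
```

With these made-up numbers, every dollar spent returns $1.50 in benefits, which is a 50% return after the costs are recovered.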

Three Ways to Evaluate Training Initiatives

1. Measure Learner Engagement

Training evaluation and learner engagement are closely related. It's great to know about successful completion rates. However, if a learner doesn't engage with the training, or, more accurately, if the training doesn't engage the learner, it won't have any impact, and the learner is less likely to retain the information or transfer it back to the job.

As you've seen from the Kirkpatrick Model, the first thing you should measure is reaction. Often, this stage is overlooked, and executives looking at ROI may be tempted to downplay the importance of this stage. However, I'd argue that this stage may in fact be the most important because without engagement, learning doesn't occur, behaviours don't change and performance doesn't improve.

Along with completion rate, Juliette Denny identifies four things to consider when measuring engagement:

- Frequency of logins - Are learners voluntarily logging in or are they constantly being reminded? Which areas of your training content do learners engage with most quickly? Which group of learners is more active on your learning platform?

- Self-led learning - How many learners access optional or recommended learning resources?

- Asking questions - How often are learners posting on forums? Opening communication channels helps you to capture the informal learning that frequently happens. Further, if learners are asking 'clarification-type' questions, it's possible your training program isn't complete.

- Content creation - Are learners adding their own content? Are they sharing things they learned with other users via forums and discussion boards?
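If your learning platform lets you export activity data, the four considerations above could be summarized along the following lines. This is only a sketch: the `LearnerActivity` fields, the `engagement_summary` function and the sample numbers are all assumptions made for illustration, and your platform's reports will likely use different names.

```python
from dataclasses import dataclass


@dataclass
class LearnerActivity:
    learner_id: str
    voluntary_logins: int           # logins not preceded by a reminder
    prompted_logins: int            # logins that followed a reminder
    optional_resources_opened: int  # optional or recommended resources accessed
    forum_posts: int                # questions and replies posted
    items_shared: int               # learner-created content shared with others


def engagement_summary(activities):
    """Summarize the four engagement indicators across a group of learners."""
    total = len(activities)
    if total == 0:
        raise ValueError("at least one learner is required")

    voluntary = sum(a.voluntary_logins for a in activities)
    all_logins = sum(a.voluntary_logins + a.prompted_logins for a in activities)

    return {
        # Share of all logins that happened without a reminder.
        "voluntary_login_share": voluntary / all_logins if all_logins else 0.0,
        # Share of learners who opened at least one optional resource.
        "self_led_share": sum(a.optional_resources_opened > 0 for a in activities) / total,
        # Average number of forum posts per learner.
        "forum_posts_per_learner": sum(a.forum_posts for a in activities) / total,
        # Share of learners who shared at least one item of their own.
        "content_creator_share": sum(a.items_shared > 0 for a in activities) / total,
    }


# Hypothetical activity for four learners.
activities = [
    LearnerActivity("L1", 8, 2, 3, 4, 1),
    LearnerActivity("L2", 1, 9, 0, 0, 0),
    LearnerActivity("L3", 6, 4, 1, 2, 0),
    LearnerActivity("L4", 5, 5, 0, 2, 1),
]

summary = engagement_summary(activities)
for name, value in summary.items():
    print(f"{name}: {value:.2f}")
```

Numbers like these are most useful when tracked over time or compared between groups of learners, rather than read as a single score.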

2. Observe Social Ownership

One of the surest ways to assess mastery is through teach-back: having the learner teach what they've learned to someone else. The idea of social ownership follows this principle.

John Eades talks about social ownership in his blog on measuring training effectiveness. He suggests that "social ownership puts learners in the position to teach others by showing how they apply concepts in the real world".

This concept has two advantages for training managers:

- Employees engage by learning from each other.

- It provides the opportunity to measure how concepts taught in training are being implemented within the organization.

3. Use Learning Analytics and Metrics

Generally, the goal of training is twofold: to improve employee performance through skill development and to see a return on investment (ROI). Data-driven learning analytics and reporting help organizations link training performance to essential business results.

There are several metrics that you can use to evaluate your training initiatives, including:

- Change in performance ratings over time

- Customer/client satisfaction ratings

- Employee engagement

- Employee turnover rates

- Percentage of promotions

- Productivity rates over time

- Employee retention rates

This list is not exhaustive, but it does give you an idea of where you can start. As you work with the analytics that are derived from these metrics, it's important that you have a plan in place to act.
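One simple way to start working with metrics like these is to compare a baseline period with a follow-up period after training. The sketch below does that with a percent-change calculation. The metric names and values are hypothetical, and a change in a metric does not by itself show that training caused it.

```python
def percent_change(before, after):
    """Change from the baseline value, expressed as a percentage of the baseline."""
    if before == 0:
        raise ValueError("baseline value must not be zero")
    return (after - before) / before * 100


# Hypothetical values measured before training and at a later follow-up.
baseline = {
    "customer_satisfaction": 80.0,
    "employee_turnover_rate": 20.0,
    "productivity_rate": 50.0,
}
followup = {
    "customer_satisfaction": 88.0,
    "employee_turnover_rate": 15.0,
    "productivity_rate": 55.0,
}

changes = {
    name: percent_change(baseline[name], followup[name])
    for name in baseline
}

for name, change in changes.items():
    print(f"{name}: {change:+.1f}%")
```

Keep in mind that direction matters: a falling turnover rate is an improvement, while a falling satisfaction rating is not, so decide in advance which way each metric should move.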

Conclusion

Measuring the effectiveness and performance of your training programs can be overwhelming at times. Most people immediately think about ROI, but there are other things that you can look at.

A good place to start is with the Kirkpatrick Model: measure reaction, learning, behaviour and results. Also consider learner engagement, social ownership and learning analytics. Having the information is great, but how, based on your experience, do you solve the challenges or issues that arise?


Jill W.

Jill is an Instructional Designer at BaseCorp Learning Systems with more than 10 years of experience researching, writing and designing effective learning materials. She is fascinated by the English language and enjoys the challenge of adapting her work for different audiences. After work, Jill continues to leverage her professional experience as she works toward the development of a training program for her cats. So far, success has not been apparent.