Building Meaningful eLearning Assessments: Summative Online Test Questions

Jill W.

What color is the sky? Everyone knows the answer is blue. Except when it's grey. Or indigo with some pinky bits near the horizon. It's a seemingly simple question, but it's not a valid assessment item unless you can be certain of the time and weather wherever learners might be answering.

Writing effective assessment questions is harder than many people realize. This article will review strategies for building fair and meaningful eLearning assessment items.

Selecting the Right Type of Assessment

The most common eLearning assessment, and the type I'll focus on here, is a computer-graded quiz consisting of some variety of multiple-choice (MC) questions: straight MC, True/False, Yes/No, drag-and-drop, matching, or similar. If that's what you intend to develop, first make sure it's an appropriate format for evaluating the project's learning objectives.

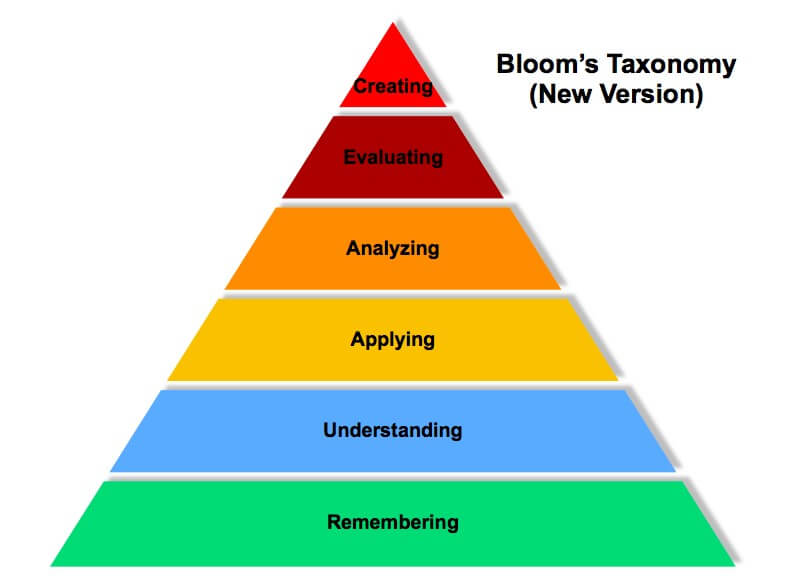

Benjamin Bloom's taxonomy of educational objectives divides objectives into three categories: the cognitive or thinking domain; the affective or feeling domain; and the psychomotor or physical domain. Each category is further divided into several levels. Bloom's original list of cognitive levels has since been updated, and the current hierarchy is:

Image retrieved from Learning Theories

Multiple-choice exams are most suitable for learning objectives that fall into the first four levels of the cognitive domain: remembering, understanding, applying and analyzing. Questions or tasks that require evaluating and creating may have an unlimited number of correct responses, rather than the single, predictable response required by a multiple-choice question.

MC questions can also be used as part of the assessment for physical and affective domain objectives, but they do not constitute a valid assessment on their own in those domains. A psychomotor objective such as "Walk 10 metres on a tightrope" can't be fairly assessed on a computer; at some point, you have to get out the tightrope.

Let's leave the tightrope behind for now and return to MC assessments. Once you've established that it's the right type of assessment for your purpose, how do you make sure you create a good one?

Building Meaningful Assessment Items

1. Ask only those questions that are necessary to verify achievement of the learning objectives

There's a second problem with "What color is the sky?" as an assessment item. Not only is the answer variable, but, frankly, who cares? A module on the physical properties of light with a learning objective of "compare wavelengths across the color spectrum" might state:

The sky is blue because blue light has shorter wavelengths than most other colors and is therefore scattered more widely by air molecules in the atmosphere.

It's all too easy for a busy Instructional Designer to spot this random fact in the material and promptly make a question of it, but even a "correct" answer reveals nothing about the learner's understanding of the physical properties of light. The question is meaningless as an assessment.

If you're wondering if a question is meaningful, refer to the learning objective. Does a person need to be able to answer the question to accomplish the objective?

2. Make sure there is a single, definitively correct answer

Questions that leave the test writer's intention unclear or offer more than one correct answer do more to confuse learners than to evaluate them.

Consider this situation:

Learning content: The Respectful Workplace Policy prohibits bullying and harassment.

Question: The Respectful Workplace Policy prohibits harassment. T/F

It's quite true that the policy prohibits harassment, but I've seen such questions marked 'False' on the grounds that the policy prohibits both bullying and harassment.

Here's another scenario:

Learning content: Sales technique X has been shown to be effective. There is also evidence from several studies that it minimizes the time spent per client issue while maximizing sales transactions.

Question: Sales technique X has been shown to be:

- A. Effective

- B. Efficient

- C. Ineffective

- D. Time consuming

Answer A, Effective, will be keyed as correct in this case because it matches the wording of the learning content. Yet any learner who read the second sentence will know that B, Efficient, is equally correct. A learner who merely remembers the content will have an advantage over a learner who understands it, which is not the intent of any assessment I've ever encountered.

The only time that more than one acceptable answer should be present is when learners are explicitly instructed to select the 'best' answer. Even then, test writers should make sure that the best answer is superior in some concrete way and not simply a more accurate quote of the learning content.

3. Include questions that assess higher-order cognitive skills

Returning to Bloom's taxonomy, remembering is not only easier to do than applying or analyzing, it's easier to test. Facing tight deadlines and demanding clients and managers, it's tempting to write up a quick memory-focused exam that pleases all the people who don't realize that it fails to assess any learning objectives requiring understanding, application or analysis.

'Best answer' questions and mini scenarios can be excellent ways to test higher orders of cognition. Instead of asking whether bullying violates the Respectful Workplace Policy, consider describing a situation or activity and asking whether it is in compliance.

4. Avoid trick questions

Setting the right level of difficulty is a skill that takes practice. Boosting the challenge level by adding a trick question or two might seem appealing, but unless your goal is to assess reading comprehension, it defeats the purpose of the assessment. "You should never, ever be trying to fail your learners."

A good way to make an assessment harder is to add more application and analysis questions. It might also be worth considering whether the assessment needs to be more difficult at all. eLearning assessments are often competency-based (criterion-referenced), meaning the goal is to determine whether each learner has achieved the learning objectives. Many people are more familiar with norm-referenced assessments, which seek to compare learners to each other and inevitably fail the poorest performers.

If your learning objectives are simple and straightforward, a simple assessment that most learners pass easily may be perfectly appropriate.

5. Good distractors are worth the time and effort it takes to craft them

I remember being taught test-taking strategies in school. We were told that one answer to a multiple-choice question was usually an unconvincing, throw-away option. You've seen the sort:

What do wild giraffes eat?

- A. Acacia leaves

- B. Acorns

- C. Lodgepole pine bark

- D. Green moon cheese

This practice fails the meaningfulness test. There's no value in knowing that giraffes don't eat green cheese from the moon.

Good distractors (wrong answer options) are plausible. They're also:

Grammatically correct

All options in a 'complete the sentence' question should make a complete, grammatically correct sentence. Watch out for "a" or "an" at the end of a stem.
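As a rough aid for the "a" or "an" check, the sketch below flags stems whose last word is an article so a person can review them against every answer option. The function name `stems_ending_in_article` and the sample stems are hypothetical, and the check is deliberately simple: it does not try to judge grammar on its own.

```python
def stems_ending_in_article(stems):
    """Return the stems whose final word is 'a' or 'an'.

    Trailing blanks, underscores, periods and colons are ignored so that
    fill-in-the-blank stems such as 'is called a ___' are still caught.
    """
    flagged = []
    for stem in stems:
        words = stem.rstrip(" _.:").split()
        if words and words[-1].lower() in {"a", "an"}:
            flagged.append(stem)
    return flagged


# Hypothetical sample stems, for illustration only
stems = [
    "A wrong answer option is called a ___",
    "Good distractors are ___",
]
print(stems_ending_in_article(stems))
# ['A wrong answer option is called a ___']
```

A flagged stem is not necessarily wrong. It only needs a second look, and rewording the stem so the article moves into each option usually resolves the problem.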

Presented in a natural order, where applicable

When there's no natural order to the answer options, it's best to have them appear randomly. If the answers are numerical or chronological or fall into some other obvious pattern, it's better to list them from least (or earliest) to greatest (or latest).

One thing to watch out for here is poor test engine design. The last thing you want is for the distractors to appear in order while the correct answer appears randomly. For example, if the correct answer is 2, you don't want to see:

- A. 1

- B. 3

- C. 4

- D. 2

In such a case, it's better to randomize all the numbers (or switch to a better test engine).
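The ordering rule can be sketched in a few lines. This is a minimal illustration, not how any particular test engine works: the function `order_options` and its parameters are assumptions made for the example. The key point is that the correct answer is sorted or shuffled together with the distractors, never placed separately.

```python
import random


def order_options(options, natural_key=None, rng=None):
    """Return all answer options (correct answer included) in display order.

    natural_key: optional function giving each option's natural sort value,
                 e.g. int for numeric answers. If omitted, options are shuffled.
    rng:         optional random.Random instance, useful for repeatable tests.
    """
    rng = rng or random.Random()
    ordered = list(options)  # copy so the caller's list is left untouched
    if natural_key is not None:
        ordered.sort(key=natural_key)  # least (or earliest) first
    else:
        rng.shuffle(ordered)  # every option, including the correct one
    return ordered


# Numeric answers have a natural order, so sort them all together
print(order_options(["1", "3", "4", "2"], natural_key=int))
# ['1', '2', '3', '4']
```

Called without `natural_key`, the same function shuffles the full set, which covers the case where the options have no obvious pattern.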

"All/none of the above" is used with care

Have you ever seen an assessment where 'all of the above' appears only when it's the correct answer? I have, on more than one occasion. If "all of the above" appears in your assessment, make sure it's available as both a correct answer and a distractor.

Some people recommend avoiding "all of the above" altogether. A learner who struggles with reading or is pressed for time might recognize the first answer as correct and select it without reading the rest. It's not a good test-taking strategy, but the goal is to assess learners' knowledge, not their reading comprehension or ability to write tests.
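If you do keep "all of the above" in a test bank, a quick count can show whether it ever appears as a distractor. The sketch below assumes a hypothetical data layout in which each question is a dictionary with `options` and `answer` keys; the function name and the sample bank are illustrative only.

```python
def audit_all_of_the_above(questions, phrase="all of the above"):
    """Count how often the phrase is the keyed answer versus a distractor."""
    as_correct = 0
    as_distractor = 0
    for question in questions:
        options = [option.strip().lower() for option in question["options"]]
        if phrase not in options:
            continue  # this question does not use the phrase at all
        if question["answer"].strip().lower() == phrase:
            as_correct += 1
        else:
            as_distractor += 1
    return {"as_correct": as_correct, "as_distractor": as_distractor}


# Hypothetical test bank, for illustration only
bank = [
    {"options": ["Bullying", "Harassment", "All of the above"],
     "answer": "All of the above"},
    {"options": ["Acacia leaves", "Acorns", "All of the above"],
     "answer": "Acacia leaves"},
    {"options": ["True", "False"], "answer": "True"},
]
print(audit_all_of_the_above(bank))
# {'as_correct': 1, 'as_distractor': 1}
```

A result with `as_distractor` at zero suggests learners could score points simply by picking the phrase whenever it appears.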

6. Answers to one question should never appear in another question

If I'm planning to ask what wild giraffes eat, I had better not ask: "What proportion of the wild giraffe diet is made up of acacia leaves?" It's an easy mistake to make when you're pressured to drum up another eleven questions for the test bank.

After you've finished writing questions, read over the full list to see whether anything pops out at you. Better still, ask someone else to read it over to make sure you haven't given anything away.
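A simple script can catch the most obvious giveaways before a human reviewer takes over. This sketch assumes a hypothetical layout in which each question has a `stem` and a keyed `answer`, and it only finds verbatim matches, so it supplements a read-through rather than replacing it.

```python
def find_answer_leaks(questions, min_length=4):
    """Return (source_index, leaking_index) pairs where the keyed answer of one
    question appears word for word (ignoring case) in another question's stem.

    Answers shorter than min_length characters (such as 'T' or 'Yes') are
    skipped because they would match almost any stem.
    """
    leaks = []
    for i, source in enumerate(questions):
        answer = source["answer"].strip().lower()
        if len(answer) < min_length:
            continue
        for j, other in enumerate(questions):
            if i != j and answer in other["stem"].lower():
                leaks.append((i, j))
    return leaks


# Hypothetical test bank, for illustration only
bank = [
    {"stem": "What do wild giraffes eat?", "answer": "Acacia leaves"},
    {"stem": "How much of the wild giraffe diet is acacia leaves?",
     "answer": "placeholder answer"},
]
print(find_answer_leaks(bank))
# [(0, 1)]
```

Here the stem of the second question gives away the answer to the first. Paraphrased giveaways will slip past a check like this, which is why a second reader is still worth asking.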

7. Use learner results to assess your assessment

The learning cycle should never end. Once your assessment is up and running, take a look at learners' results and use the information to evaluate the assessment. Pay particular attention to questions that learners are answering poorly:

- Is the question well-written?

- Is it confusing?

- Is the learning material clear on that topic?
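One way to find the questions that deserve this scrutiny is to calculate the proportion of learners who answered each one correctly and flag the low scorers. The sketch below assumes results are available as a mapping from question ID to a list of correct/incorrect values; the function name, the 0.5 threshold and the sample data are all hypothetical and should be adjusted to your own context.

```python
def flag_hard_items(responses, threshold=0.5):
    """Return {question_id: proportion_correct} for questions answered
    correctly by fewer than `threshold` of learners.

    responses: dict mapping question id -> list of booleans (True = correct).
    """
    flagged = {}
    for question_id, results in responses.items():
        if not results:
            continue  # no attempts yet, nothing to evaluate
        proportion_correct = sum(results) / len(results)
        if proportion_correct < threshold:
            flagged[question_id] = proportion_correct
    return flagged


# Hypothetical results, for illustration only
responses = {
    "Q1": [True, True, True, False],
    "Q2": [False, False, True, False],
}
print(flag_hard_items(responses))
# {'Q2': 0.25}
```

A flagged question is a prompt for review, not proof of a bad item: the cause could equally be a confusing question or unclear learning material.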

Conclusion

In this article, I explained when multiple-choice assessments should be used and described how to develop clear, meaningful questions and confirm their value. Do you agree with the points here, or do you have any of your own to add?


Jill W.

Jill is an Instructional Designer at BaseCorp Learning Systems with more than 10 years of experience researching, writing and designing effective learning materials. She is fascinated by the English language and enjoys the challenge of adapting her work for different audiences. After work, Jill continues to leverage her professional experience as she works toward the development of a training program for her cats. So far, success has not been apparent.